One of the most basic things that you learn in Haskell is function composition. If we want to call multiple functions in a row, it's very easy to call them all in sequence. Say, for instance, we have some functions for some (hypothetical) users. Perhaps we want to plan a romantic sunset viewing for our users on their birthdays.1 2

data UserID = ...

data User = ...

getUser :: UserID -> User

userBirthday :: User -> Day

sunsetTime :: Day -> TimeOfDayOnce we want to build all of this up into a real program that calculates the sunset time for each user, it's easy enough. Just compose all the functions together.

getUserSunsetTime :: UserID -> TimeOfDay

getUserSunsetTime = sunsetTime . userBirthday . getUserBut there's one thing we've neglected here: error handling! Each of the three constituent functions can potentially fail; maybe a user for a given ID doesn't exist, maybe they haven't set their birthday, or maybe they live somewhere where the sun doesn't set. If something goes wrong, the functions as outlined above would have no choice but to throw an exception or return some junk data.

For a language that prides itself on safety and correctness, that seems pretty painful. So like a good Haskeller, we rewrite our functions to reflect the fact that they might fail in their type, by returning a Maybe.

getUser :: UserID -> Maybe User

userBirthday :: User -> Maybe Day

sunsetTime :: Day -> Maybe TimeOfDaySeems okay, but now we run into another problem: these functions don't compose! We can't pass the return value of one into the next, since the types don't line up. We can implement our new version of getUserSunsetTime by just using pattern matching instead, but when we do:

getUserSunsetTime :: UserID -> Maybe TimeOfDay

getUserSunsetTime uid =

case getUser uid of

Nothing -> Nothing

Just user -> case userBirthday user of

Nothing -> Nothing

Just birthday -> sunsetTime birthdayYuck. We have to constantly check at every step whether we've gotten a Nothing, and the nesting gets deeper and deeper. The actual logic is getting buried underneath a bunch of syntactic noise. You can see how this would get worse and worse the more functions we need to add to this chain as well. We've gotten more reliable error handling, but in exchange it seems like we had to give up the expressiveness of function composition.

The thing is, the pattern matching logic isn't anything hard. We're doing the exact same thing at each level of nesting: check whether the result of the previous function is Nothing, and return Nothing if it is. Otherwise, we call the next function in the chain. Is there a way we can abstract this?

Let's take some inspiration from the composition operator. If we had a function that composed left to right called compose, what we want to write is composeMaybe. Then we could write our new implementation of getUserSunsetTime just as easily as if our components were normal functions!

compose :: (a -> b) -> (b -> c) -> (a -> c)

composeMaybe :: (a -> Maybe b) -> (b -> Maybe c) -> (a -> Maybe c)

getUserSunsetTime :: UserID -> Maybe TimeOfDay

getUserSunsetTime =

(getUser `composeMaybe` userBirthday) `composeMaybe` sunsetTimeNow we just need to implement composeMaybe, and we have what we want: early return, the way we'd have in an imperative language, combined with type safety and the expressiveness of our original code.

composeMaybe f g = \x ->

case f x of

Nothing -> Nothing

Just x' -> g x'Another thing that can trip you up is how to do logging. Since Haskell functions are pure, how do you send events to Sentry or whatever logging service you're using? Even something like printing diagnostics to the console gets thwarted by the type system. Setting up a complete, robust logging solution that interacts with third-party services is something we'll talk about in a later post, but for now, what would a simple solution to this problem look like?

Let's assume that we're okay with getting all diagnostic messages at the end of running our program; we don't need live logging, we can just dump a log file once our program completes. We could handle this by having each function return a string alongside its normal return value.

doubleIt :: Double -> (String, Double)

addTwo :: Double -> (String, Double)

truncateIt :: Double -> (String, Integer)

doubleIt n = ("doubling the value...\n", n * 2)

addTwo n = ("adding 2...\n", n + 2)

truncateIt n = ("truncating floating point...\n", truncate n)The idea is that when we want to call these functions, we get back all the log messages and then it's our responsibility to combine then to produce the full log message to return. Here's how that might look:

doubleAddTruncate :: Double -> (String, Integer)

doubleAddTruncate n =

let (log1, n1) = doubleIt n

(log2, n2) = addTwo n1

(log3, n3) = truncateIt n2

in (log1 ++ log2 ++ log3, n3)This works fine, but you can see that it's not exactly pretty. If you had to write an entire program like this, it would quickly become a mess of repetitive and error-prone code to assemble the log messages. We've run into the same problem: our functions don't compose cleanly, even though the logging string is totally orthogonal to the actual functionality; the "actual" return types line up fine. We still have to do all the plumbing ourselves!

Just like with our error-handling example, we're doing the same thing over and over again in our code. It seems like there should be a way to abstract it away. Can we write a composeLogFun that handles the plumbing for us? Then we could, once again, write our doubleAddTruncate just as simply as function composition.

composeLogFun :: (a -> (String, b)) -> (b -> (String, c)) -> (a -> (String, c))

composeLogFun f g = \x ->

let (log1, x') = f x

(log2, result) = g x'

in (log1 ++ log2, result)

doubleAddTruncate :: Double -> (String, Integer)

doubleAddTruncate =

(doubleIt `composeLogFun` addTwo) `composeLogFun` truncateItThe signatures for our compose functions are looking pretty similar.

compose :: (a -> b ) -> (b -> c ) -> (a -> c )

composeMaybe :: (a -> Maybe b ) -> (b -> Maybe c ) -> (a -> Maybe c )

composeLogFun :: (a -> (String, b)) -> (b -> (String, c)) -> (a -> (String, c))The only thing that's different is how the return types are "wrapped." That is, it seems like this could be an OOP interface or Haskell typeclass, with Maybe and tuples as specific instances. What if we try abstracting over this?

class Mystery ty where

mystery :: (a -> ty b) -> (b -> ty c) -> (a -> ty c)

instance Mystery Maybe where

mystery = composeMaybe

-- You'll need -XFlexibleInstances for this declaration

-- If this looks weird, we're using partial application at the type level

instance Mystery ((,) String) where

mystery = composeLogFunThe compiler accepts this just fine. Success!

This is the point where I make a big show of lifting the curtain on the Wizard of Oz: the mystery typeclass is Monad. Where normal function composition lets us compose normal functions, monads let us compose functions where the return values are "embellished" in some way, whether that's with error handling (Maybe), logging (Writer), side-effects (IO), or loads of other capabilities.

There's just one extra piece needed to write a real Monad instance, which is a function confusingly named return, whose sole purpose is to "wrap" a pure value in a monad context.3

class Monad m where

-- this funky-looking operator is just our mystery function!

(>=>) :: (a -> m b) -> (b -> m c) -> (a -> m c)

return :: a -> m a

-- our functions from before

getUserSunsetTime :: UserID -> Maybe TimeOfDay

getUserSunsetTime =

getUser >=> userBirthday >=> sunsetTime

doubleAddTruncate :: Double -> (String, Integer)

doubleAddTruncate =

doubleIt >=> addTwo >=> truncateItWithin actual Haskell code, you'll likely see the >>= and >> operators more often, but these operators' functionality is just a subset of our >=> above.

(>>=) :: Monad m => m a -> (a -> m b) -> m b

(>>=) x f = ((\() -> x) >=> f) ()

(>>) :: Monad m => m a -> m b -> m b

(>>) x y = x >>= (\_ -> y)That is, >>= (also called "bind") works when you already have a wrapped value to sequence with another function. >> (also called "then") is useful in similar situations, but it completely ignores the return of the first monadic value. It's most useful when working with side effects, where you simply use the first value to do something in the real world, like a putStrLn.

Regardless of these extra subtleties, monads are at their core what we saw with the Maybe and logging examples: a way of sequencing functions with a context more interesting than just chaining function calls. It provides a way to "insert" extra functionality between each function in the chain.4

"That's great and all that we can abstract composition this way, but what's the actual point? What do we actually get from this?"

Good question! After all, just writing the composeMaybe and composeLogFun functions was enough to shorten our code while still giving us the advantages of embellishing our functions. Why do we need this fancy-schmancy Monad concept and its concomitant endless elitism-accusations, math-circlejerking, and mountain of terrible monad tutorials (including this one)?

Just like OOP interfaces, it means that we can write generic code without worrying about the concrete implementation that we're dealing with. For instance, what if we take the venerable map function and make it monadic?

map :: (a -> b) -> [a] -> [b]

mapM :: Monad m => (a -> m b) -> [a] -> m [b]

mapM f [] = return []

mapM f (x : xs) = do

mapped <- f x

rest <- mapM f xs

return (mapped : rest)Let's plug in some actual types for the monad in the type here and see what we get:

mapM :: (a -> Maybe b) -> [a] -> Maybe [b]

mapM :: (a -> (String, b)) -> [a] -> (String, [b])If we plug in Maybe, we end up with a version of map that knows about failure; if the function we give it fails on any of the inputs, it will short circuit and give us back Nothing for a result. If we plug in our logging tuple, we end up with a version of map that collects all the log messages that it gets while running the provided function on each input. We got two very different functionalities from the exact same function definition! And there are many other monads we could use, all of which would change what mapM does into something different and useful. Not bad for such a small amount of code.

Making functions more generic using monads this way has been called the Strategy Pattern for Haskell. Many other monad-generic functions are available in Control.Monad and Control.Monad.Loops.

Another advantage of having the concept of monads is that you need less specific documentation for each library you come across; if you see that some type implements Monad, you already have a lot of information about how to use it. You'll still need to know what the implementation "inserts" between each function call, but oftentimes you can infer what it does from what the library is meant to do.

If you've already written some Haskell code, the operators we've seen so far might not be that familiar, especially the >=> operator. You've probably seen >>= and something called do-syntax; we've even used it above in our definition of mapM. What's going on with that?

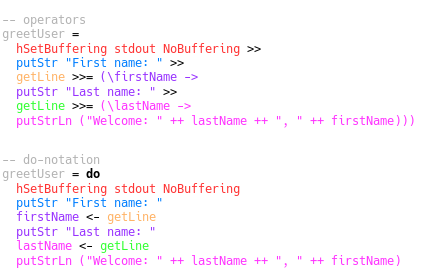

Let's take a look at actually using the >>= operator. Knowing what we now know, and knowing that IO is a monad, let's try writing a quick interactive program:

import System.IO

putStr :: String -> IO ()

putStrLn :: String -> IO ()

getLine :: IO String

hSetBuffering :: Handle -> BufferMode -> IO ()

greetUser :: IO ()

greetUser =

hSetBuffering stdout NoBuffering >>

putStr "First name: " >>

getLine >>= (\firstName ->

putStr "Last name: " >>

getLine >>= (\lastName ->

putStrLn ("Welcome: " ++ lastName ++ ", " ++ firstName)))This works; you can put this in GHCi and run it just fine. But you can see that it's not exactly the prettiest thing. Having to nest new anonymous functions everytime we want to use the return value of the previous step gets annoying, and the formatting is a mess.

Do-syntax is a convenient syntactic sugar that emits exactly the same operators as above. For instance, the equivalent program written using do-notation would be:

greetUser :: IO ()

greetUser = do

hSetBuffering stdout NoBuffering

putStr "First name: "

firstName <- getLine

putStr "Last name: "

lastName <- getLine

putStrLn ("Welcome: " ++ lastName ++ ", " ++ firstName)You can see that it's just a little bit cleaner than writing it with operators. However, underneath the hood, the entire do block is just an expression that produces a value of the given monad type, which means that you're free to mix and match operators and do syntax. It behaves exactly as you would expect any other expression would.

greetUser :: IO ()

greetUser =

hSetBuffering stdout NoBuffering >>

putStr "First name: " >>

(do firstName <- getLine

putStr "Last name: "

lastName <- getLine

putStrLn ("Welcome: " ++ lastName ++ ", " ++ firstName))Here's a diagram showing how the desugaring corresponds to the operator-based definition:

With that, you should hopefully have enough understanding of monads to write your own programs. While there are still lots of abstractions built on top of this, like monad transformers, effect stacks, and so on, everything comes back to these basics of monad composition.

This monad tutorial is heavily inspired by Bartosz Milewski's post on Kleisli categories.

Found something confusing in this post and had a question? Got a comment? Talk to me!

You might also like

Before you close that tab...

Want to write practical, production-ready Haskell? Tired of broken libraries, barebones documentation, and endless type-theory papers only a postdoc could understand? I want to help. Subscribe below and you'll get useful techniques for writing real, useful programs straight in your inbox.

Absolutely no spam, ever. I respect your email privacy. Unsubscribe anytime.

Footnotes

↥1 We’ll ignore how we’re actually getting this data about our users. Perhaps we have an in-memory cache of user data.

↥2 Day and TimeOfDay are provided by the time package.

↥3 Yes, I know the connotations of that word in imperative languages makes its choice somewhat unfortunate in Haskell. Here, it’s just a normal function, and doesn’t do anything strange to flow of control.

↥4 This is why you might have heard them called “programmable semicolons” before.